As someone who’s been fascinated by artificial intelligence for years now, I’ve always been intrigued by how neural networks are able to learn complicated patterns and make predictions. Recently I’ve been diving deeper into the actual mechanisms behind neural networks and one concept that’s helped everything click into place is the activation function. You might be wondering – what exactly is an activation function and why is it so important? Stick with me and I’ll do my best to explain in plain English!

Understanding the Activation Function in AI

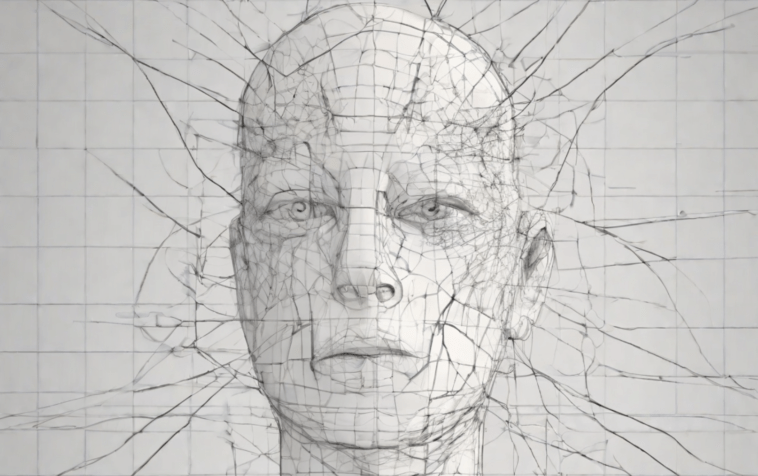

To understand activation functions, it helps to first understand the basic structure of an artificial neural network. At the core of any neural network are artificial neurons, which are inspired by the biological neurons in our own brains. These artificial neurons are connected to each other, much as biological neurons communicate in the brain via synapses. Each connection between neurons can strengthen or weaken based on inputs, much like synapses change in the brain during learning and memory formation.

So if these artificial neurons are the basic “thinking” units of a neural network, how do they actually process information? Well, each neuron receives input from all the neurons it’s connected to. These inputs each have a numerical weight assigned to them depending on how strong that connection is. The neuron will multiply each input by its associated weight, sum all those weighted inputs, and this sum becomes the neuron’s net input.
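To make the weighted sum concrete, here is a minimal Python sketch. The function name `net_input` and the example numbers are my own illustrative choices, not something from a particular library.

```python
def net_input(inputs, weights):
    """Multiply each input by its weight and sum the results."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


# Illustrative values: signals from three upstream neurons and the
# weights on their connections to this neuron.
inputs = [1.0, 0.5, -2.0]
weights = [0.5, -1.0, 0.25]

total = net_input(inputs, weights)
print(total)  # 0.5 + (-0.5) + (-0.5) = -0.5
```

Many real networks also add a bias term to this sum; I have left it out here to match the description above.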

This is where the activation function comes in. Based on the net input it receives, the activation function determines how much this neuron will “fire”, or what its output signal will be. Essentially, it’s deciding how active or inactive this neuron should be based on its total net input. This output is then shared with other neurons that this neuron is connected to, and so on throughout the network.

Step Function

The most basic activation function is a step function. With a step function, if the net input surpasses a certain threshold value, the neuron “fires” or its output equals 1. If the net input is below the threshold value, the neuron does not fire and its output equals 0.
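In code, a step activation could look something like the sketch below. The default threshold of 0 and the decision to output 0 when the net input lands exactly on the threshold are assumptions on my part; conventions vary.

```python
def step(net, threshold=0.0):
    """Return 1 if the net input surpasses the threshold, otherwise 0."""
    # Assumption: a net input exactly equal to the threshold does not fire.
    return 1 if net > threshold else 0


for net in (-0.5, 0.0, 0.3):
    print(net, "->", step(net))
```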

While simple, this on-off step function activation doesn’t allow for much nuance – a neuron is either fully on or fully off. Early neural networks used step functions with some success, but researchers found neural networks learned faster and could solve more complex problems using activation functions that provided a smoother, more analog output.

Sigmoid Function

One of the earliest and still widely used “smoothing” activation functions is the sigmoid function. With a sigmoid function, as the net input increases the neuron’s output value will smoothly transition from 0 to 1, never fully reaching either endpoint. Like a biological neuron that can fire at varying intensities, the sigmoid activation allows a neuron to be partially active rather than just fully on or off.
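For the curious, the usual formula behind this curve is the logistic function, 1 / (1 + e^(-x)). Here is a small sketch of it in Python; the two-branch form is just one common way to avoid overflow for very negative inputs, not the only way to write it.

```python
import math


def sigmoid(net):
    """Logistic sigmoid: squashes any real number into the range 0 to 1."""
    if net >= 0:
        return 1.0 / (1.0 + math.exp(-net))
    # For negative inputs, this equivalent form avoids computing a huge exp().
    e = math.exp(net)
    return e / (1.0 + e)


# Outputs rise smoothly as the net input increases.
for net in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"net={net:+.1f} -> output={sigmoid(net):.3f}")
```

A net input of 0 gives exactly 0.5, the midpoint between "off" and "on".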

This smooth, continuous output helps neural networks learn much more efficiently through backpropagation. Essentially, it allows the network to slightly “raise” or “lower” the weights on connections to neurons based on how far off the output was from the expected value. With a step function, a small change to a weight usually leaves the output exactly the same, so the network can’t tell whether slightly increasing or decreasing a weight helped or hurt: the output is just right or wrong. The sigmoid provides a continuous spectrum that lets neural networks fine-tune their weights over many iterations of training.
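Here is a tiny experiment that illustrates the difference. It nudges a single weight by a small amount and compares how the step and sigmoid outputs respond. The input, weight, and nudge values are made up purely for illustration.

```python
import math


def step(net, threshold=0.0):
    return 1 if net > threshold else 0


def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))


x = 1.0       # a single input (illustrative)
w = 0.4       # the current weight (illustrative)
nudge = 0.01  # a small increase to the weight

# How much does each neuron's output move when the weight is nudged?
step_change = step(x * (w + nudge)) - step(x * w)
sigmoid_change = sigmoid(x * (w + nudge)) - sigmoid(x * w)

print("step output change:   ", step_change)
print("sigmoid output change:", sigmoid_change)
```

The step output does not move at all, so there is no signal to learn from, while the sigmoid output shifts by a small positive amount that tells the network which direction the nudge pushed things.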

Activation functions like rectified linear units (ReLU) have since become even more popular than the sigmoid. But without the fundamental stepping stone of the continuous, squishy sigmoid function, I’m not sure neural networks would have taken off in the way they have. Hope this breakdown helps provide more insight into an often overlooked but critical piece of how artificial neural networks are able to learn complex patterns! Let me know if any part is still unclear.

The All-Important Derivative

For activation functions to really help neural networks learn efficiently, they need one other crucial property – their derivatives need to be easy to calculate. This comes into play with backpropagation, the process neural networks use to update their weights based on the error between the predicted and actual outputs.

Under the hood, backpropagation involves taking partial derivatives of the loss function with respect to the weights to determine how much to adjust each weight. If the activation function doesn’t have a clean, straightforward derivative, this process of calculating gradients becomes extremely computationally expensive or impossible.
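To show roughly what this looks like in practice, here is a sketch of gradient descent on a single sigmoid neuron. Everything specific here is an assumption chosen to keep the example small: the squared-error loss, the learning rate of 0.5, the starting weights, and the function name `gradient_step`. A real network repeats this same chain-rule idea across many neurons and layers.

```python
import math


def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))


def gradient_step(weights, inputs, target, learning_rate=0.5):
    """One gradient-descent update for a single sigmoid neuron.

    Returns the updated weights and the loss measured before the update.
    """
    net = sum(w * x for w, x in zip(weights, inputs))
    output = sigmoid(net)
    loss = 0.5 * (output - target) ** 2  # assumed loss: squared error

    # Chain rule: dLoss/dw_i = dLoss/dOutput * dOutput/dNet * dNet/dw_i
    dloss_doutput = output - target
    doutput_dnet = output * (1.0 - output)  # derivative of the sigmoid
    new_weights = [
        w - learning_rate * dloss_doutput * doutput_dnet * x  # dNet/dw_i = x
        for w, x in zip(weights, inputs)
    ]
    return new_weights, loss


weights = [0.1, -0.2]  # illustrative starting weights
inputs = [1.0, 2.0]    # illustrative inputs
target = 1.0           # the output we want the neuron to produce

losses = []
for _ in range(5):
    weights, loss = gradient_step(weights, inputs, target)
    losses.append(loss)

print(losses)  # each loss is smaller than the one before it
```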

The sigmoid function checks this box nicely: its derivative is simply the function value times one minus the function value, which is very cheap for computers to calculate. This makes it well suited to backpropagation. Some other common activation functions like tanh and ReLU also have simple derivatives that allow for efficient gradient computation during training.
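As a sanity check, the sketch below writes out these derivatives and compares each one against a numerical estimate. The helper names are my own, and treating the ReLU derivative as 0 at exactly zero is a common convention rather than a mathematical fact, since ReLU has no true derivative at that point.

```python
import math


def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))


def relu(net):
    return max(0.0, net)


def sigmoid_derivative(net):
    s = sigmoid(net)
    return s * (1.0 - s)  # function value times one minus the function value


def tanh_derivative(net):
    return 1.0 - math.tanh(net) ** 2


def relu_derivative(net):
    # Assumption: use 0 at net == 0, where the derivative is undefined.
    return 1.0 if net > 0 else 0.0


def numerical_derivative(f, net, h=1e-6):
    """Central-difference estimate of the slope of f at net."""
    return (f(net + h) - f(net - h)) / (2 * h)


# Compare the formulas with numerical estimates at a few points away from 0.
for net in (-2.0, 0.5, 3.0):
    assert abs(sigmoid_derivative(net) - numerical_derivative(sigmoid, net)) < 1e-6
    assert abs(tanh_derivative(net) - numerical_derivative(math.tanh, net)) < 1e-6
    assert abs(relu_derivative(net) - numerical_derivative(relu, net)) < 1e-6

print(sigmoid_derivative(0.0))  # 0.25, the steepest point of the sigmoid
```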

Summary

So in summary, activation functions act as the switches that determine how active or inactive each artificial neuron is based on its total inputs. Beyond just providing an on/off output, the “squishy” nature of activation functions like sigmoid helps neural networks learn patterns in analog rather than digital ways. And crucially, their easy-to-calculate derivatives are what empower the backpropagation algorithm and efficient learning via neural networks. Hope this gave some useful insights into these unsung heroes behind deep learning’s success!